Chat: The Journey From a Feature to a SaaS Solution

SaaS, short for software as a service, has become increasingly popular as a cost-effective solution for businesses. By eliminating the need for upfront investments in hardware and software infrastructure, companies can significantly reduce their IT expenses. Moreover, SaaS offers scalability and automatic updates, allowing businesses to adapt and grow without the hassle of managing complex systems. This enables organizations to focus more on their core competencies and strategic initiatives, ultimately driving productivity and efficiency. So how do you build a SaaS solution inside a product company? This article offers some perspectives on the answer.

The Problem

In 2016, when our business was in its early stages, our chat feature played a vital role in facilitating communication between buyers and sellers alongside traditional methods like phone calls and SMS. Our organization (Carousell Group) then scaled rapidly across multiple countries and business units, including Chotot (the leading C2C marketplace in Vietnam), where we served over 1 million monthly active users and saw approximately 440k messages exchanged daily across more than 70k new channels. As a result, we recognized the need to optimize operational costs, standardize the chat experience, and ensure scalability. To address these requirements and empower micro business units, we made the strategic decision to transform our chat feature into a robust SaaS system.

To meet our growing needs, we set out to develop a chat system with specific objectives in mind.

- Scalability: We aimed to build a system capable of scaling seamlessly to at least 10 million monthly active users. This included handling peaks of approximately 100k concurrent connections, guaranteeing a smooth and uninterrupted chat experience even during periods of high traffic.

- Performance: Our focus was on maintaining high-quality performance with an API response time consistently below 100 milliseconds. This would ensure that users could enjoy near-instantaneous interactions, fostering real-time conversations and enhancing overall engagement.

- Integration: We recognized the importance of providing easy integration with client software development kits (SDKs). This would enable developers to seamlessly incorporate our chat system into their own applications, saving them time and effort while ensuring a seamless user experience.

- Analytics: We understood the value of data and its critical role in driving informed decision-making. With this in mind, we sought to optimize the chat system's design to enable efficient consumption and analysis of chat data for valuable insights and performance monitoring.

Legacy System

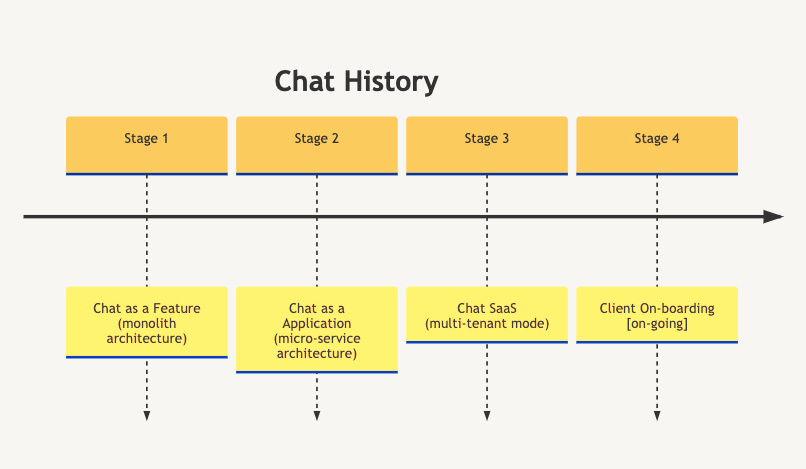

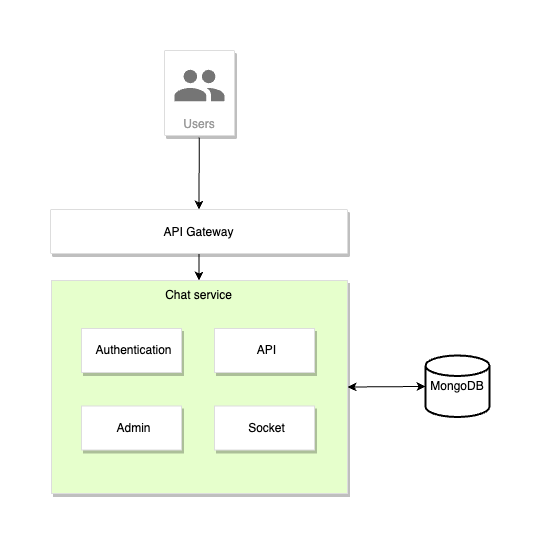

During stage 1 (Legacy), we used a tech stack consisting of Node.js, MongoDB, and socket.io, following a monolithic architecture. In this setup, a single Node.js service was responsible for authentication, API handling, and socket handling. MongoDB was selected as the database because it suited the scale we required at that time while offering efficient performance. Besides the chat service itself, we ran multiple surrounding services to fulfill our business requirements, which made the whole setup both costly and complex to maintain.

While the monolithic core itself was simple, it made it difficult to keep the core chat experience stable and to scale effectively to meet business needs.

New Architecture

In stage 2 of our development process, we focused on three key actions: changing the tech stack, transitioning from a monolithic to a microservices architecture, and migrating our infrastructure from on-premise to the cloud.

To modernize our technology stack, we deliberately chose Go for our backend development. The decision was influenced not only by Go's widespread acceptance in the industry but also by its growing popularity within our own group.

Inspired by the achievements of large companies like Discord, which has demonstrated success in managing trillions of messages, we turned to ScyllaDB as our database solution. Compatible with the battle-tested Apache Cassandra, ScyllaDB offered us the scalability, fault tolerance, and high availability required to handle the massive volume of data generated by our chat system. Its distributed ring architecture allowed us to store and retrieve chat messages, user information, and other critical data with high efficiency and reliability.

Recognizing the significance of real-time communication in our chat product, we continued to leverage the power of Socket.io. Renowned for its ability to facilitate seamless two-way interactive communication sessions, Socket.io proved to be a good choice for our real-time communication needs. It enabled us to create dynamic and responsive chat experiences, allowing users to exchange messages in real time, receive typing indicators, and track message-read status effortlessly.

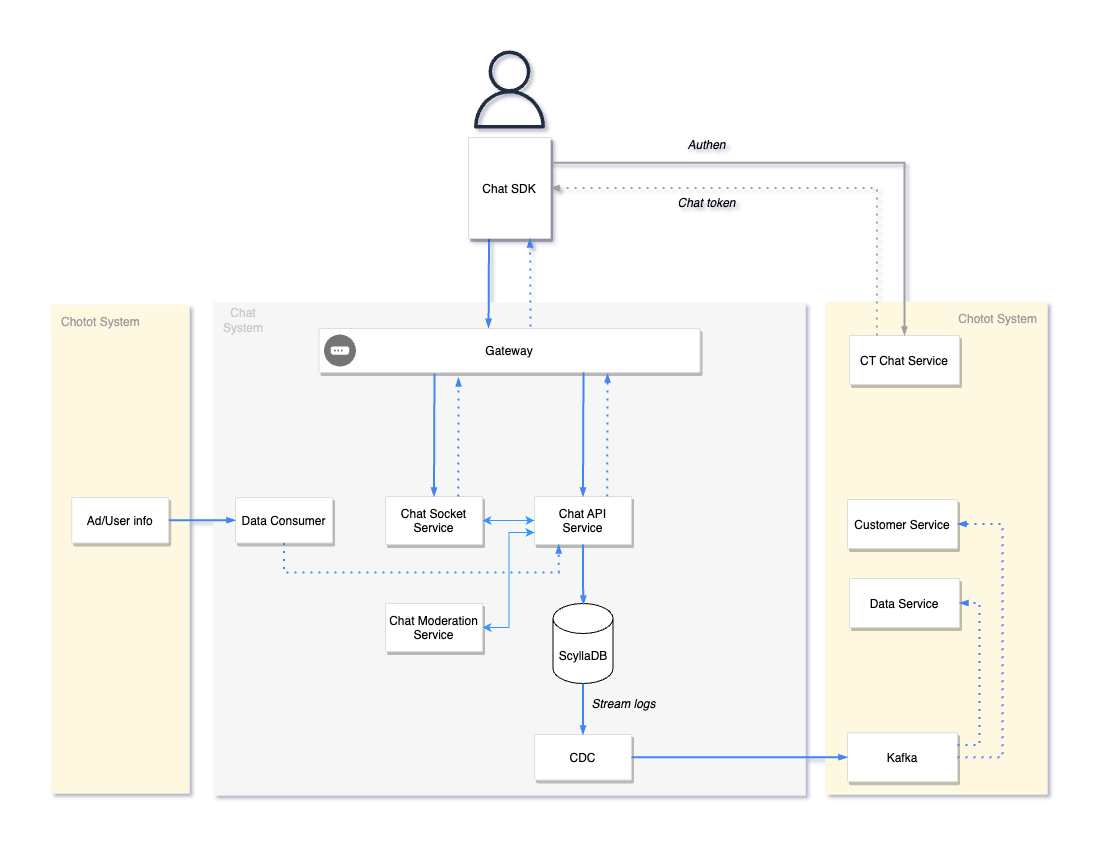

During the re-architecting process, we implemented a three-zone approach to separate and optimize our chat system. In the first zone, we developed services that actively collected data or listened for data changes from our clients, such as new user registrations or inserted ads. Moving to the second zone, we focused on crucial components including an API service to handle core logic, a moderation service for pre-processing messages, a Socket service implemented in Node.js to manage socket connections and handle chat events, Redis for efficient caching of frequently changing data, and ScyllaDB as our robust storage solution for channels, messages, and user information. The final zone encompasses back-office and analytic tasks like message logs and user sessions, which are handled through a CDC (Change Data Capture) service that streams database logs to the client system. By dividing the chat system into these distinct zones, we improved scalability, maintainability, and overall performance while ensuring optimal handling of user interactions and data management.

The flow of the chat ecosystem can be described through the following steps:

- When a user creates an account on the Chotot system, the system triggers an account-creation event, which is sent to a message queue (Kafka).

- The core chat consumer captures this event and calls the Chat API service to create a corresponding account in the chat system.

- When the user logs in to Chotot and visits the chat page, they are provided with a chat access token in addition to their Chotot access token.

- The Chat SDK uses the chat access token to authenticate with the backend system, establishing a connection between the client and the server.

- Users can create channels through a RESTful API and send messages through the socket connection.

- All user actions, such as messaging and channel creation, go through a moderation process. If any content violates the rules, it is immediately dropped before reaching the main processing.

- Events occurring during the user's journey are stored in the database. A CDC service captures the database changes and triggers events sent to the message queue (Kafka).

- The Chotot system consumes these events from the queue for various tasks such as data analytics and user logs.
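The steps above can be sketched in Go, purely as an illustration. This is a minimal in-memory model, not the actual service code: a buffered channel stands in for the CDC stream to Kafka, a slice stands in for ScyllaDB, and the moderation rule is assumed to be a simple word list. All names (`ChatSystem`, `Event`, `Moderate`, the event type strings) are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Event is a hypothetical message exchanged over the queue (Kafka in the article).
type Event struct {
	Type    string // e.g. "account.created", "message.stored" (assumed names)
	Payload string
}

// ErrRejected is returned when moderation drops a message.
var ErrRejected = errors.New("message rejected by moderation")

// ChatSystem is an in-memory stand-in for the core chat zone.
type ChatSystem struct {
	accounts map[string]bool // chat accounts keyed by user ID
	messages []string        // stand-in for the message table
	cdc      chan Event      // stand-in for the CDC stream to the queue
	banned   []string        // assumed moderation rule: a simple word list
}

func NewChatSystem(banned []string) *ChatSystem {
	return &ChatSystem{
		accounts: make(map[string]bool),
		cdc:      make(chan Event, 16),
		banned:   banned,
	}
}

// Consume handles events published by the client system,
// creating a chat account for each newly registered user.
func (c *ChatSystem) Consume(e Event) {
	if e.Type == "account.created" {
		c.accounts[e.Payload] = true
	}
}

// Moderate reports whether the text passes the assumed word-list check.
func (c *ChatSystem) Moderate(text string) bool {
	lower := strings.ToLower(text)
	for _, w := range c.banned {
		if strings.Contains(lower, w) {
			return false
		}
	}
	return true
}

// Send moderates a message first. Only accepted messages are stored,
// and each stored message produces a change event for downstream consumers.
func (c *ChatSystem) Send(userID, text string) error {
	if !c.accounts[userID] {
		return fmt.Errorf("unknown chat user %q", userID)
	}
	if !c.Moderate(text) {
		return ErrRejected
	}
	c.messages = append(c.messages, text)
	c.cdc <- Event{Type: "message.stored", Payload: text}
	return nil
}

func main() {
	chat := NewChatSystem([]string{"scam"})
	chat.Consume(Event{Type: "account.created", Payload: "user-1"})

	fmt.Println(chat.Send("user-1", "Is this still available?"))
	fmt.Println(chat.Send("user-1", "This is a SCAM link"))
	fmt.Println("stored:", len(chat.messages), "change events:", len(chat.cdc))
}
```

The point of the sketch is the ordering: moderation runs before anything is persisted, so rejected content never produces a database change and therefore never reaches the analytics consumers.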

As part of our infrastructure migration, we moved from on-premise to cloud-based solutions, using GKE to deploy our main services and GCE for storage. This transition gives us greater scalability and flexibility and aligns with our organization's overall strategy.

In this stage, after carefully considering factors such as resource allocation, timeline, and existing infrastructure, we made the strategic decision to deploy the system in single-tenant mode. This means each client deploys its own infrastructure and keeps its data separate, while all clients share the same architecture and source code.

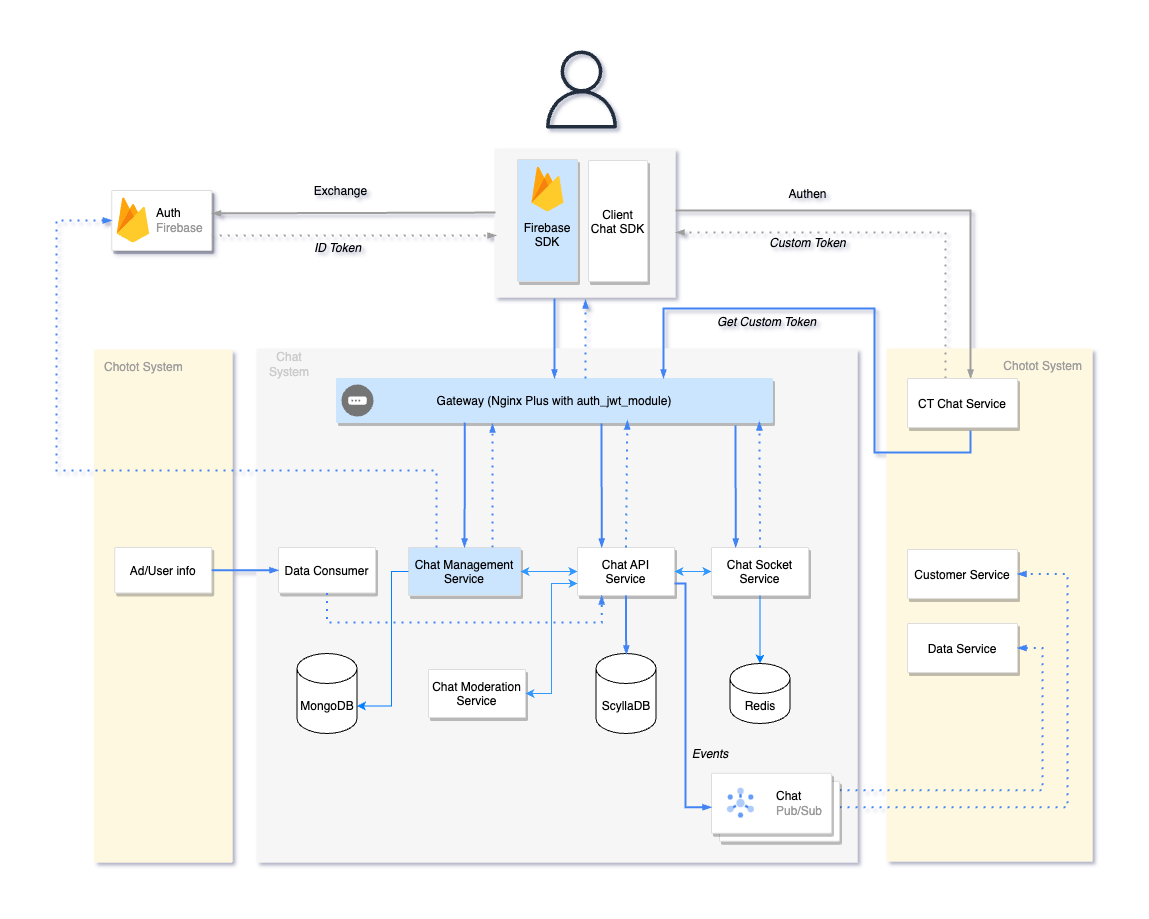

Enhancing For Multi-tenancy

Upon reflection on our operations in stage 2, we recognized that our existing single-tenant approach did not meet our expectations. The setup process for new business units (BUs) or clients was laborious, resulting in inconsistent experiences and performance due to separate deployments. To overcome these challenges, we determined that transitioning to a multi-tenant mode, which aligns with SaaS best practices, was necessary. This involved refactoring our database schema and authentication mechanism to accommodate multiple tenants within a single system, providing a more efficient and streamlined experience for our clients.

In terms of the database schema, we introduced a new field called "projectID" to differentiate each client within the system. For authentication management, such as issuing tokens and refresh tokens, we used FirebaseAuth and implemented a custom mechanism to integrate it with our authentication system. Additionally, we introduced a new service called the management service, responsible for handling authentication and communicating with the Firebase server. By using the JWT authentication module on Nginx Plus, we centralized authentication validation at the gateway layer, eliminating the need for individual services to handle authentication requests. This approach not only simplifies the authentication process but also improves the overall performance and security of our system.
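To illustrate how a "projectID" field can isolate tenants inside one shared system, here is a minimal Go sketch. It assumes every row carries the tenant's project ID and that every lookup is keyed by it. The in-memory map stands in for the database, and the type and function names (`Message`, `Store`, `channelKey`) are hypothetical rather than taken from the real schema.

```go
package main

import "fmt"

// Message is a hypothetical row. ProjectID mirrors the "projectID" field
// and identifies the tenant that owns the row.
type Message struct {
	ProjectID string
	ChannelID string
	Body      string
}

// channelKey combines tenant and channel, so two tenants can reuse the
// same channel ID without seeing each other's data.
type channelKey struct {
	ProjectID string
	ChannelID string
}

// Store is an in-memory stand-in for the shared multi-tenant database.
type Store struct {
	byChannel map[channelKey][]Message
}

func NewStore() *Store {
	return &Store{byChannel: make(map[channelKey][]Message)}
}

// Insert files the message under its tenant and channel.
func (s *Store) Insert(m Message) {
	k := channelKey{ProjectID: m.ProjectID, ChannelID: m.ChannelID}
	s.byChannel[k] = append(s.byChannel[k], m)
}

// List returns only the messages in the given tenant's channel.
func (s *Store) List(projectID, channelID string) []Message {
	return s.byChannel[channelKey{ProjectID: projectID, ChannelID: channelID}]
}

func main() {
	s := NewStore()
	s.Insert(Message{ProjectID: "tenant-a", ChannelID: "c1", Body: "hi from A"})
	s.Insert(Message{ProjectID: "tenant-b", ChannelID: "c1", Body: "hi from B"})

	// Same channel ID, different tenants: each query sees one message.
	fmt.Println(len(s.List("tenant-a", "c1")), len(s.List("tenant-b", "c1")))
}
```

In a setup like the one described, the project ID used for lookups would come from the token validated at the gateway rather than from client input, so a caller cannot ask for another tenant's data by changing a parameter.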

For the migration plan, we adopted a strategy of running a dual system in parallel, referred to as versions 3.0 and 3.1. Here are the key steps we followed:

- The existing system continued to serve the 3.0 application and internal ecosystem.

- We took a snapshot of the 3.0 Scylla cluster.

- The entire 3.1 system, including the 3.1 Scylla cluster, was deployed on the Google Cloud Platform (GCP).

- A worker was implemented to synchronize data between the two systems. This allowed us to revert to the 3.0 system in case of critical errors during the release.

- A new gateway domain was set up for the 3.1 system.

- The release started with the web application.

- We subsequently published a new version of the mobile app that was compatible with the 3.1 system.
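The synchronization worker in the steps above is not described in detail, so the following Go sketch only shows one possible approach. It assumes a last-write-wins rule based on an update timestamp, and it uses in-memory maps in place of the two Scylla clusters. `Record` and `SyncNewer` are hypothetical names.

```go
package main

import "fmt"

// Record is a hypothetical row that exists in both clusters.
type Record struct {
	ID        string
	Body      string
	UpdatedAt int64 // Unix timestamp of the last write
}

// SyncNewer copies every record from src that is missing in dst or newer
// than dst's copy, and returns how many records it copied.
func SyncNewer(src, dst map[string]Record) int {
	copied := 0
	for id, r := range src {
		if cur, ok := dst[id]; !ok || r.UpdatedAt > cur.UpdatedAt {
			dst[id] = r
			copied++
		}
	}
	return copied
}

func main() {
	v30 := map[string]Record{
		"m1": {ID: "m1", Body: "hello", UpdatedAt: 100},
	}
	v31 := map[string]Record{
		"m1": {ID: "m1", Body: "hello (edited)", UpdatedAt: 200},
		"m2": {ID: "m2", Body: "new in 3.1", UpdatedAt: 210},
	}

	// One pass in each direction makes both systems converge, which is
	// what keeps a rollback to 3.0 possible during the release.
	fmt.Println("3.0 -> 3.1 copied:", SyncNewer(v30, v31))
	fmt.Println("3.1 -> 3.0 copied:", SyncNewer(v31, v30))
}
```

Because a record is copied only when it is missing or strictly newer, running the worker repeatedly is safe: a second pass over already synchronized data copies nothing.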

This migration presented a significant challenge, as we needed to ensure seamless data migration and keep both systems running concurrently. Modifying the mobile applications already installed on users' devices was not feasible, and forcing app updates would have been impractical for many old app versions and unfriendly to users. Instead, we defined the sunset point for the old system as the moment when adoption of old app versions dropped below a predefined threshold of 2% on each platform. Three months after the release of version 3.1, we met this condition and proceeded to shut down the entire 3.0 system.
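The sunset rule can be expressed as a small check. The Go sketch below assumes adoption is measured as the share of active users still on old app versions, per platform. The `PlatformUsage` type and the user counts in `main` are made up for illustration and are not figures from the project.

```go
package main

import "fmt"

// sunsetPercent is the old-version adoption threshold from the article (2%).
const sunsetPercent = 2

// PlatformUsage holds hypothetical active-user counts for one platform.
type PlatformUsage struct {
	Platform   string
	OldVersion int // active users still on app versions that need 3.0
	Total      int // all active users on the platform
}

// ReadyToSunset reports whether old-version adoption is below the
// threshold on every platform.
func ReadyToSunset(usages []PlatformUsage) bool {
	for _, u := range usages {
		if u.Total == 0 {
			continue // no users on this platform, nothing blocks the sunset
		}
		// Integer form of OldVersion/Total >= 2%, avoiding float rounding.
		if u.OldVersion*100 >= u.Total*sunsetPercent {
			return false
		}
	}
	return true
}

func main() {
	// Illustrative numbers only.
	usages := []PlatformUsage{
		{Platform: "android", OldVersion: 1500, Total: 100000}, // 1.5%
		{Platform: "ios", OldVersion: 900, Total: 60000},       // 1.5%
	}
	fmt.Println("ready to sunset 3.0:", ReadyToSunset(usages))
}
```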

As expected with such a major overhaul across multiple platforms, the number of reported bugs increased by 20% during the release and the period that followed. However, these were minor bugs, and we addressed and resolved each one promptly.

After completing these stages, we successfully achieved our key results:

- By migrating from the legacy system and implementing the new architecture, we managed to significantly reduce infrastructure costs. We went from spending $5,000 to approximately $2,500, resulting in a 50% cost reduction based on our actual usage.

- Within one quarter of the release, we observed a marked drop in user-reported issues related to crashes and delays. The number of reported incidents decreased by approximately 70%, indicating enhanced stability and performance in the system.

- We introduced various business features such as auto-reply messages, message templates, and location sharing. The remarkable aspect is that these features were implemented without making any modifications to the core chat system.

Conclusion

The current system handles peak traffic of 20,000 connections and processes over 10 million API requests per day while maintaining an API response time of less than 100ms. The ScyllaDB cluster, consisting of three nodes, exhibits excellent performance, with 99th percentile write latencies below 5ms and read latencies below 15ms. Our service level agreement (SLA) guarantees 99.9% service availability in the production environment in any given month. Most importantly, our system can scale continuously in step with business growth.
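To put these figures in perspective, a 99.9% monthly availability target leaves a downtime budget of roughly 43 minutes, and 10 million requests per day averages out to about 116 requests per second. The short Go sketch below does that arithmetic. It assumes a 30-day month and an even spread of requests over the day, and the function names are illustrative.

```go
package main

import (
	"fmt"
	"time"
)

// AllowedDowntime returns the downtime budget for a period at a given
// availability target (for example 0.999 for 99.9%).
func AllowedDowntime(period time.Duration, availability float64) time.Duration {
	return time.Duration(float64(period) * (1 - availability))
}

// AverageRPS converts a daily request count into an average per-second rate.
func AverageRPS(requestsPerDay float64) float64 {
	return requestsPerDay / (24 * time.Hour).Seconds()
}

func main() {
	month := 30 * 24 * time.Hour // assumption: a 30-day month

	budget := AllowedDowntime(month, 0.999).Round(time.Second)
	fmt.Println("monthly downtime budget:", budget)

	fmt.Printf("average load: %.1f requests/second\n", AverageRPS(10_000_000))
}
```

The average rate is a lower bound on what the system must sustain, since real traffic is not evenly spread and peaks will be noticeably higher.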

Currently, we are in the process of onboarding the next client within our group. Setting up a new client takes approximately 30 minutes, but the migration and integration stages require significant time and effort. We thoroughly explore the client's system and workflow to collaboratively develop a comprehensive migration plan.

Although this overview does not delve into technical details due to the complexity of the transformation process, I hope it provides a general understanding of the steps involved. I plan to cover each technical aspect in separate articles, offering more in-depth information on each topic.

Last but not least, I’m just a “cheerleader” for this project. We could not have achieved our goals without the collective contribution of every member of my team!