AI Blog #4: Building AI Products with Workflow: Lessons from Our Technical Journey

Introduction: From Idea to Real Users

In a recent project, we built and shipped AI Job Smart Screen, an AI-powered tool designed to help recruiters screen job applications more efficiently.

The product integrates AI into the recruitment flow by automatically analyzing candidate profiles, highlighting key qualifications, and reducing manual effort for hiring teams. This was Vertical's first AI product, and it's already in the hands of real users.

Looking back, the most valuable takeaway is how a workflow-based approach helped us navigate the complexity of building AI for the first time.

In this blog, I’ll share:

- Why we chose workflow as the backbone of our AI solution.

- The technical process of implementing an AI product, from requirements to solution.

- Practical tips on using AI to speed up coding, debugging, and architectural decision-making.

- How prompt design and self-consistency improved our outputs.

- The role of engineers in steering AI-powered development.

- Lessons learned on accelerating delivery without needing deep AI expertise upfront.

Case Study: Our Technical Journey

1. The Challenge & Solution Direction

This was Vertical's first AI product, and the team lacked deep AI experience. The challenge was how to move fast, ensure reliability, and keep the system maintainable.

We decided to adopt a workflow-based approach because:

- The problem followed a clear chain of steps (A → B → C …).

- We needed repeatability, traceability, and maintainability.

- Workflow made logic transparent and easier to debug.

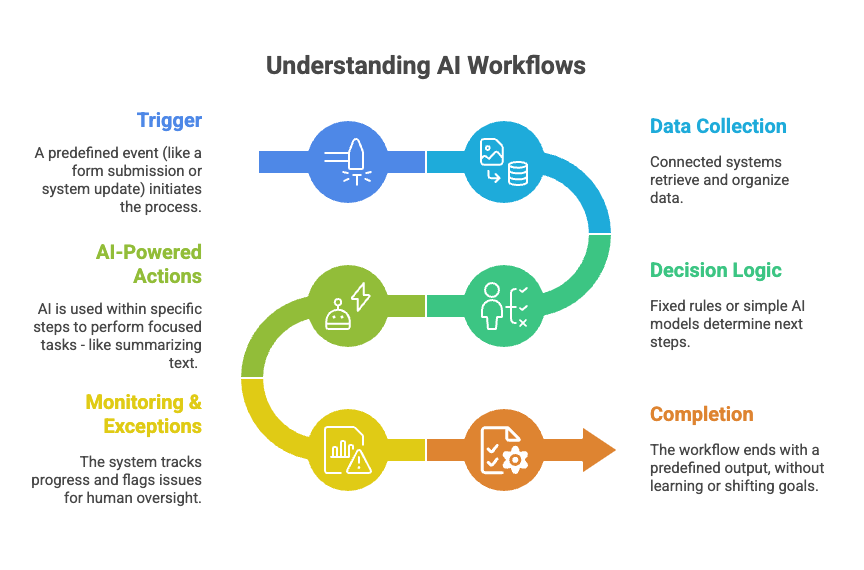

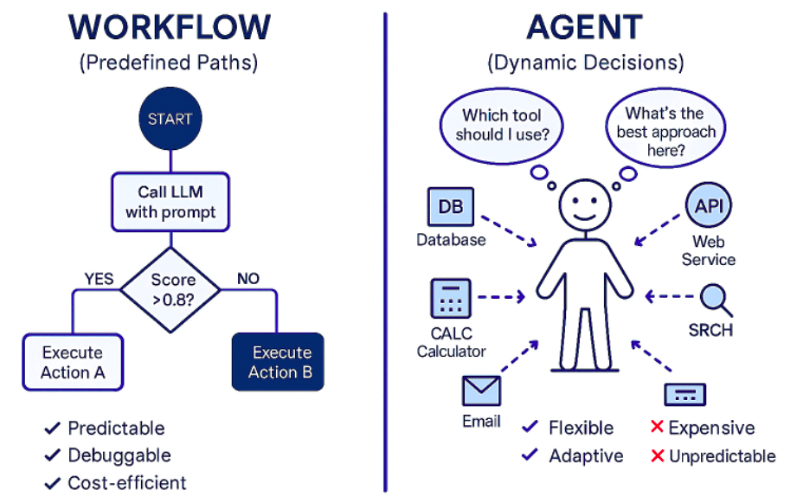

Looking at the comparison:

- Workflow relies on predefined paths. It’s predictable, debuggable, and cost-efficient—perfect for problems like screening candidates where the steps are clear and repetitive.

- AI Agents, by contrast, make dynamic decisions, such as “Which tool should I use?” or “What’s the best approach here?” This makes them flexible and adaptive, but also more expensive and less predictable.

For AI Job Smart Screen, predictability and cost-efficiency mattered more than flexibility. We didn’t need an agent exploring different tools or improvising solutions—we needed a system that consistently followed a defined process, gave traceable results, and was easy to maintain.

Instead of chasing trendy technologies, we focused on building an efficient, fit-for-purpose workflow.

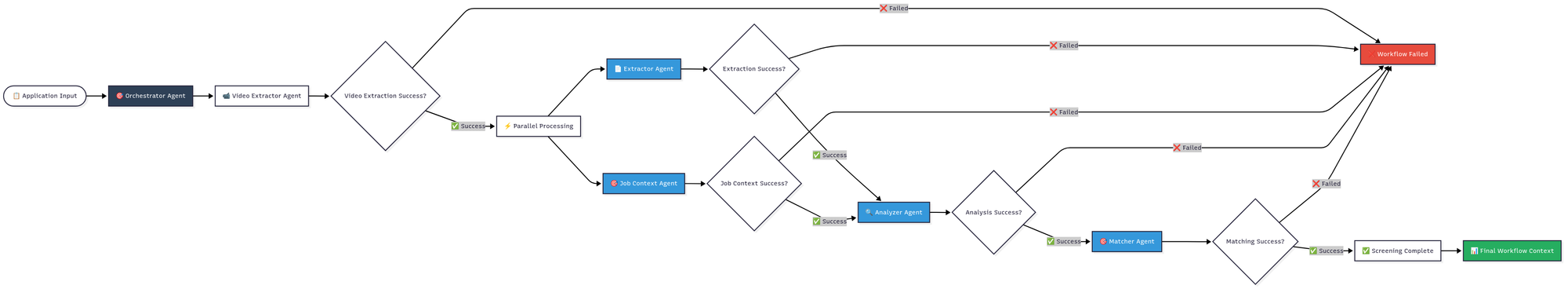

2. Workflow Implementation

Designing the workflow involved:

- Defining step inputs and outputs clearly.

- Introducing an orchestrator to manage execution.

- Adding retry and fallback mechanisms for resilience.
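A minimal Python sketch of these three ideas, assuming a simple in-process design: each step is a named function that takes a context dict and returns an updated one, the orchestrator runs the steps in a fixed order, a failing step is retried with exponential backoff, and a fallback function runs if every retry fails. `Step`, `run_step`, `run_workflow`, and the example steps (`parse_cv`, `flaky_score`, `manual_review`) are hypothetical names for illustration, not our production stack.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

# Hypothetical step signature: read a context dict, return an updated copy.
Context = Dict[str, Any]
StepFn = Callable[[Context], Context]


@dataclass
class Step:
    name: str
    run: StepFn
    fallback: Optional[StepFn] = None  # used only after all retries fail
    max_retries: int = 2               # extra attempts after the first one
    backoff_s: float = 0.0             # base delay, doubled after each failure


def run_step(step: Step, ctx: Context) -> Context:
    """Run one step with retries; fall back (or raise) if it keeps failing."""
    last_error: Optional[Exception] = None
    for attempt in range(step.max_retries + 1):
        try:
            return step.run(ctx)
        except Exception as err:  # a real system would catch narrower errors
            last_error = err
            if attempt < step.max_retries:
                time.sleep(step.backoff_s * (2 ** attempt))
    if step.fallback is not None:
        return step.fallback(ctx)
    raise RuntimeError(f"step {step.name!r} failed") from last_error


def run_workflow(steps: List[Step], ctx: Context) -> Context:
    """Orchestrator: execute the predefined steps in order (A -> B -> C)."""
    trace: List[str] = []
    for step in steps:
        ctx = run_step(step, ctx)
        trace.append(step.name)  # record the path taken, for traceability
    return {**ctx, "trace": trace}


# --- Usage with hypothetical steps ---
def parse_cv(ctx: Context) -> Context:
    skills = [s.strip().lower() for s in ctx["cv_text"].split(",")]
    return {**ctx, "skills": skills}


attempts = {"score": 0}


def flaky_score(ctx: Context) -> Context:
    attempts["score"] += 1
    if attempts["score"] == 1:
        raise TimeoutError("simulated model timeout")  # first call fails
    required = {"python", "sql"}
    matched = required & set(ctx["skills"])
    return {**ctx, "score": len(matched) / len(required)}


def manual_review(ctx: Context) -> Context:
    return {**ctx, "score": None, "needs_review": True}


result = run_workflow(
    [Step("parse_cv", parse_cv), Step("score", flaky_score, fallback=manual_review)],
    {"cv_text": "Python, SQL, Docker"},
)
print(result["score"], result["trace"])  # 1.0 ['parse_cv', 'score']
```

Keeping each step a plain function behind a single orchestrator is what makes steps easy to test, retry, or swap on their own. In a larger deployment, the loop could be replaced by a workflow engine or a queue without changing the step contracts.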

We explored different frameworks and tools before choosing the stack that balanced scalability with flexibility. With workflow, we could:

- Scale parts of the system independently.

- Adjust logic easily when requirements changed.

This flexibility proved essential, as AI product requirements evolve rapidly.

3. Using AI in Coding & Debugging

AI played a critical role as a pair programmer:

- Code generation: Instead of starting from zero, we used AI to generate initial code snippets, speeding up delivery.

- Logic checks: AI helped verify edge cases and suggested libraries or algorithms.

- Debugging: By pasting error logs, we let AI analyze root causes and propose fixes. This saved hours compared to manual trial-and-error debugging.

💡 Tip: Always ask AI to explain its reasoning when debugging. This not only fixes the issue but also improves the team's understanding.
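To illustrate the debugging workflow and the tip above, here is a small Python helper that turns a caught exception into a prompt. The prompt asks for a root cause, a minimal fix, and the reasoning behind both. The helper name `build_debug_prompt` and the prompt wording are illustrative assumptions. The resulting string can be pasted into, or sent to, whichever AI assistant the team uses.

```python
import traceback


def build_debug_prompt(err: BaseException, code_context: str = "") -> str:
    """Format an exception and its traceback into a debugging prompt."""
    # format_exception(type, value, tb) is accepted by all current Python 3 versions.
    tb_text = "".join(traceback.format_exception(type(err), err, err.__traceback__))
    sections = [
        "You are helping debug a Python service.",
        "Error log:",
        tb_text.strip(),
    ]
    if code_context:
        sections += ["Relevant code:", code_context.strip()]
    # Asking for reasoning, not just a patch, lets engineers verify the answer.
    sections.append(
        "1. Identify the most likely root cause.\n"
        "2. Propose a minimal fix.\n"
        "3. Explain your reasoning step by step, so the team can verify it."
    )
    return "\n\n".join(sections)


# Usage: capture a real error and build the prompt from it.
try:
    {"score": 0.8}["risk_factors"]
except KeyError as err:
    prompt = build_debug_prompt(err, code_context='result["risk_factors"]')
    print(prompt)
```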

4. AI as a Consultant for Solution Design

Beyond coding, AI served as a consultant when we were stuck:

- We asked for patterns, architectures, and best practices.

- Compared multiple approaches side by side before making a decision.

- In one case, AI suggested simplifying our workflow steps to reduce complexity—an idea we later adopted successfully.

This “consulting role” of AI allowed us to move quickly without waiting for external expert reviews.

5. Prompt Design & Tips

One critical factor behind the quality of outputs in AI Job Smart Screen is prompt design. Since our team had limited AI expertise at the start, prompt engineering became a new kind of programming skill we had to learn.

Our approach to prompting:

- Clear & structured prompts: Instead of vague requests, we provided detailed instructions with explicit input/output formats.

- Workflow-driven prompting: We broke down complex logic into multiple workflow steps, each with its own prompt, instead of packing everything into a single one.

- Instruction + context: Always combined “what to do” (task instruction) with “context” (rules, data, or examples) to reduce hallucinations.
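Here is a minimal sketch of how these three ideas can fit together, assuming prompts are plain strings assembled in Python. Each workflow step gets its own template. Each template pairs a task instruction with explicit context and an explicit output format. The step names, rule text, and the `build_prompt` helper are hypothetical examples, not our production prompts.

```python
from string import Template

# One small prompt per workflow step, instead of one prompt that does everything.
STEP_PROMPTS = {
    "extract_skills": Template(
        "Task: List the technical skills mentioned in the CV below.\n"
        "Rules: Only include skills explicitly stated in the CV; do not infer.\n"
        "CV:\n$cv_text\n"
        "Output format: a JSON array of lowercase strings."
    ),
    "match_requirements": Template(
        "Task: Compare the candidate's skills with the job requirements.\n"
        "Context: Job requirements: $requirements\n"
        "Candidate skills: $skills\n"
        "Output format: JSON with fields matched (array) and missing (array)."
    ),
}


def build_prompt(step: str, **context: str) -> str:
    """Fill the step's template; raises KeyError if any context field is missing."""
    return STEP_PROMPTS[step].substitute(**context)


print(build_prompt("extract_skills", cv_text="5 years of Python and SQL."))
```

Using `substitute` rather than `safe_substitute` is deliberate in this sketch. A missing piece of context fails loudly instead of silently sending an incomplete prompt to the model.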

Practical tips we learned:

- Iterate & test multiple prompts → use AI itself to generate prompt variants, then A/B test them.

- Role play setup → e.g., “You are an HR assistant…” makes the AI more context-aware.

- Enforce structured outputs → e.g., “Return the result in JSON with fields: score, reasoning, and risk factors.”

- Treat prompts like code → version them, test them, and review them regularly.
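Here is a minimal sketch of the last three tips together. It shows a role-play prompt stored as a named, versioned object, plus a parser that validates the model's JSON reply instead of trusting it. The field names follow the example above (`score`, `reasoning`, `risk_factors`). The 0-100 score range, the version string, and the `PromptVersion` and `parse_result` names are assumptions made for illustration.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PromptVersion:
    """A prompt treated like code: named, versioned, and reviewable."""
    name: str
    version: str
    text: str


SCORE_PROMPT = PromptVersion(
    name="cv_score",
    version="1.2.0",  # hypothetical version; bump on every wording change
    text=(
        "You are an HR assistant screening CVs for the role below.\n"
        "Return the result in JSON with fields: score (integer 0-100), "
        "reasoning (string), and risk_factors (array of strings)."
    ),
)


@dataclass
class ScreeningResult:
    score: int
    reasoning: str
    risk_factors: List[str]


def parse_result(raw: str) -> ScreeningResult:
    """Validate the model's reply; missing fields raise KeyError."""
    data = json.loads(raw)  # raises json.JSONDecodeError on non-JSON output
    score = data["score"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(score, bool) or not isinstance(score, int) or not 0 <= score <= 100:
        raise ValueError(f"score out of range: {score!r}")
    if not isinstance(data["reasoning"], str):
        raise ValueError("reasoning must be a string")
    risks = data["risk_factors"]
    if not isinstance(risks, list) or not all(isinstance(r, str) for r in risks):
        raise ValueError("risk_factors must be a list of strings")
    return ScreeningResult(score, data["reasoning"], risks)


reply = '{"score": 78, "reasoning": "Strong SQL, limited cloud.", "risk_factors": ["short tenure"]}'
result = parse_result(reply)
print(SCORE_PROMPT.name, SCORE_PROMPT.version, result.score)  # cv_score 1.2.0 78
```

Logging the prompt name and version next to each parsed result makes it possible to trace any output back to the exact prompt wording that produced it.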

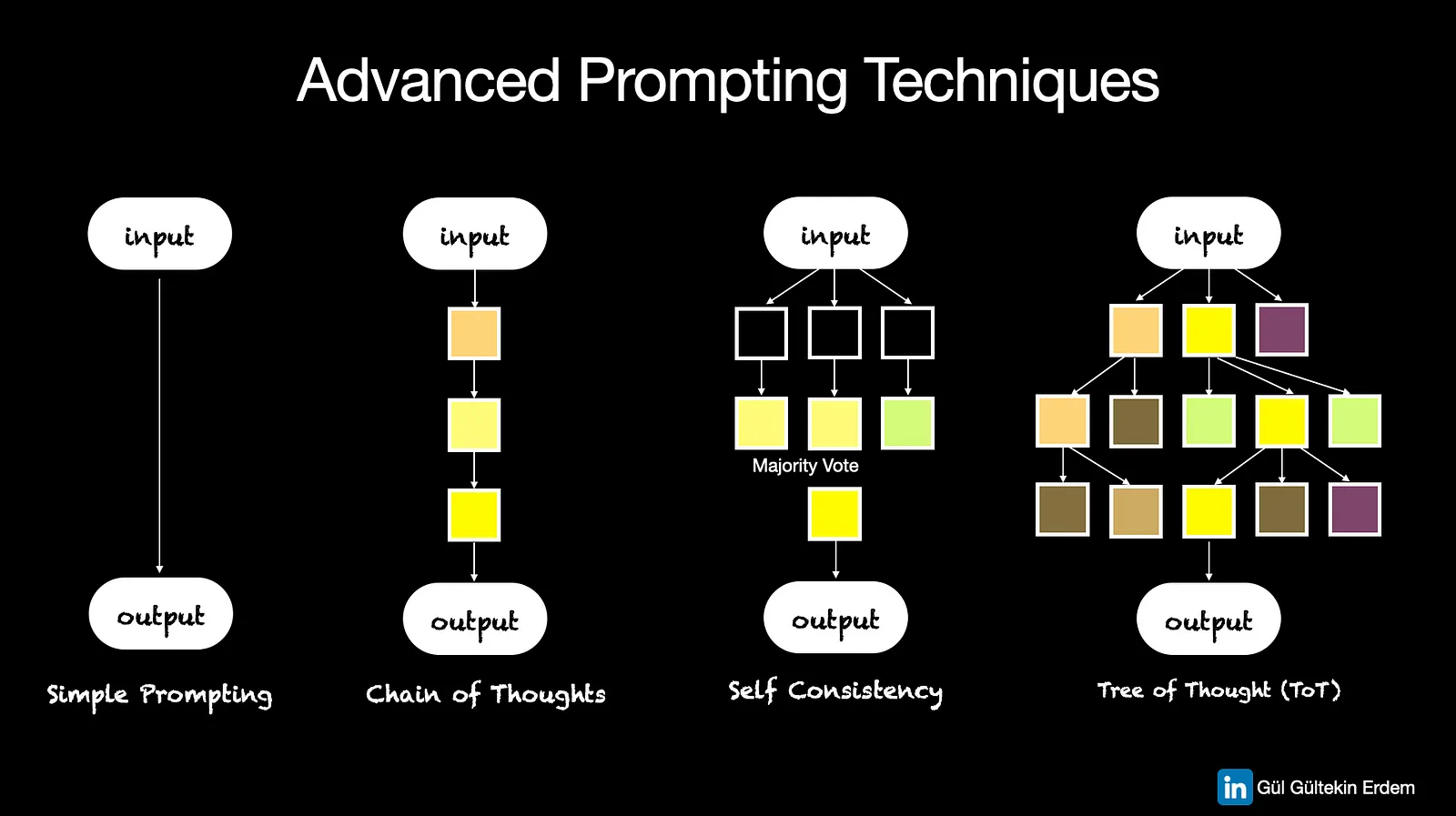

Self-Consistency for Better Results

One advanced prompting technique we found valuable is self-consistency. Instead of relying on a single response from the AI, we generate multiple reasoning paths and then aggregate the answers.

For example, when scoring a candidate’s CV, the AI may provide slightly different reasoning each time, because outputs are sampled (for example, with a non-zero temperature). By running the same prompt multiple times and consolidating the answers (e.g., majority vote or averaging the scores), we achieve:

- More reliable outputs (less variance across runs).

- Reduced risk of “lucky” or “unlucky” answers.

- Closer alignment with human judgment in subjective tasks.
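Here is a minimal sketch of the aggregation step, assuming each model call returns a numeric score plus a recommendation label. `fake_model` stands in for a real LLM call sampled with non-zero temperature. The 0-100 scale, the labels, and the `spread` signal are illustrative assumptions, not our production logic.

```python
import random
from collections import Counter
from statistics import mean, pstdev
from typing import Callable, Dict, List, Tuple

# Hypothetical model call: returns (score 0-100, recommendation label).
ScoreFn = Callable[[str], Tuple[int, str]]


def self_consistent_score(cv_text: str, score_fn: ScoreFn, n: int = 5) -> Dict[str, object]:
    """Sample the same prompt n times and aggregate the answers."""
    samples: List[Tuple[int, str]] = [score_fn(cv_text) for _ in range(n)]
    scores = [s for s, _ in samples]
    labels = [label for _, label in samples]
    label, votes = Counter(labels).most_common(1)[0]  # majority vote on the label
    return {
        "score": mean(scores),     # average of the numeric scores
        "spread": pstdev(scores),  # large spread suggests low confidence
        "recommendation": label,
        "agreement": votes / n,    # share of samples that agree with the vote
    }


# Stand-in for a sampled LLM call: noisy scores around 70.
rng = random.Random(42)


def fake_model(cv_text: str) -> Tuple[int, str]:
    score = max(0, min(100, round(rng.gauss(70, 8))))
    return score, "interview" if score >= 65 else "reject"


summary = self_consistent_score("Python, SQL, 5 years", fake_model, n=7)
print(summary)
```

Averaging smooths the numeric score, while the majority vote handles the categorical decision. A large spread or low agreement can serve as a hint that a case deserves a human look.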

💡 Tip: Use self-consistency when the task involves reasoning or evaluation. It’s more resource-intensive, but for critical steps in the workflow (like candidate scoring), the payoff in reliability is worth it.

6. The Role of Engineers in an AI-Driven Workflow

While AI tools played a big part in accelerating our development, it’s important to emphasize that engineers remained in full control of the process.

AI supported us as a:

- Code assistant → generating snippets or suggesting alternatives.

- Debugging helper → analyzing error logs and proposing fixes.

- Solution consultant → surfacing design patterns or best practices.

But the engineer’s role was never replaced. Instead, engineers acted as:

- Researchers → exploring frameworks, evaluating AI suggestions, and identifying trade-offs.

- Planners → designing the workflow structure, defining retry/fallback strategies, and scoping prompts.

- Experimenters → testing multiple prompts, running comparisons, applying self-consistency.

- Decision-makers → validating AI-generated outputs, rejecting poor solutions, and making the final architectural choices.

In short, AI accelerated the journey, but engineers steered the direction. The product quality relied not just on AI’s capabilities, but on our continuous iteration and human judgment to refine prompts, workflows, and logic until the product was robust enough for real users.

Lessons Learned

- Workflow is ideal when requirements are clear, repetitive, and need transparency.

- AI accelerates development by helping in three dimensions: coding, debugging, and consulting.

- Prompt engineering is just as important as coding—treat prompts like versioned assets.

- Self-consistency improves reliability, especially in reasoning-heavy tasks like candidate evaluation.

- Engineers remain in the driver’s seat: AI is a tool, but humans are responsible for planning, experimenting, and making final decisions.

- Even with limited AI expertise at the start, a team can move fast by strategically leveraging AI tools.

- Success also depends on collaboration with stakeholders and thoughtful workflow design.

Conclusion

By combining a workflow-first mindset, solid prompt design, and AI-assisted development, we delivered a production-ready solution in record time.

For teams exploring AI for the first time, the key is simple:

- Use workflow to structure complexity.

- Use AI as both a builder and a consultant.

- Design prompts carefully and apply self-consistency for reliability.

- Focus on efficiency and adaptability over trends.