AI Blog #3: AI Agent Chatbot - From Practice to Real Application

Conversational AI has gone through a remarkable transformation. What began as simple chatbots that followed predefined scenarios has quickly evolved into a new era driven by Large Language Models (LLMs) and advanced AI agent techniques: systems that can reason, remember, and act on their own.

But how does this revolution translate to the real world, and what does it mean for a classifieds platform like Chotot? To answer these questions, we experimented with AI Agents and adopted them in our production applications. Our journey with AI Agents wasn’t just about following a trend; it was about finding better ways to help our customers and keep our business running smoothly in a rapidly changing landscape.

In this blog, we share our real-life journey from designing to developing AI agent chatbots that handle a wide range of business needs. We close with the real results and impact we’ve experienced by bringing AI Agents into our daily operations.

Why AI Agent Chatbots?

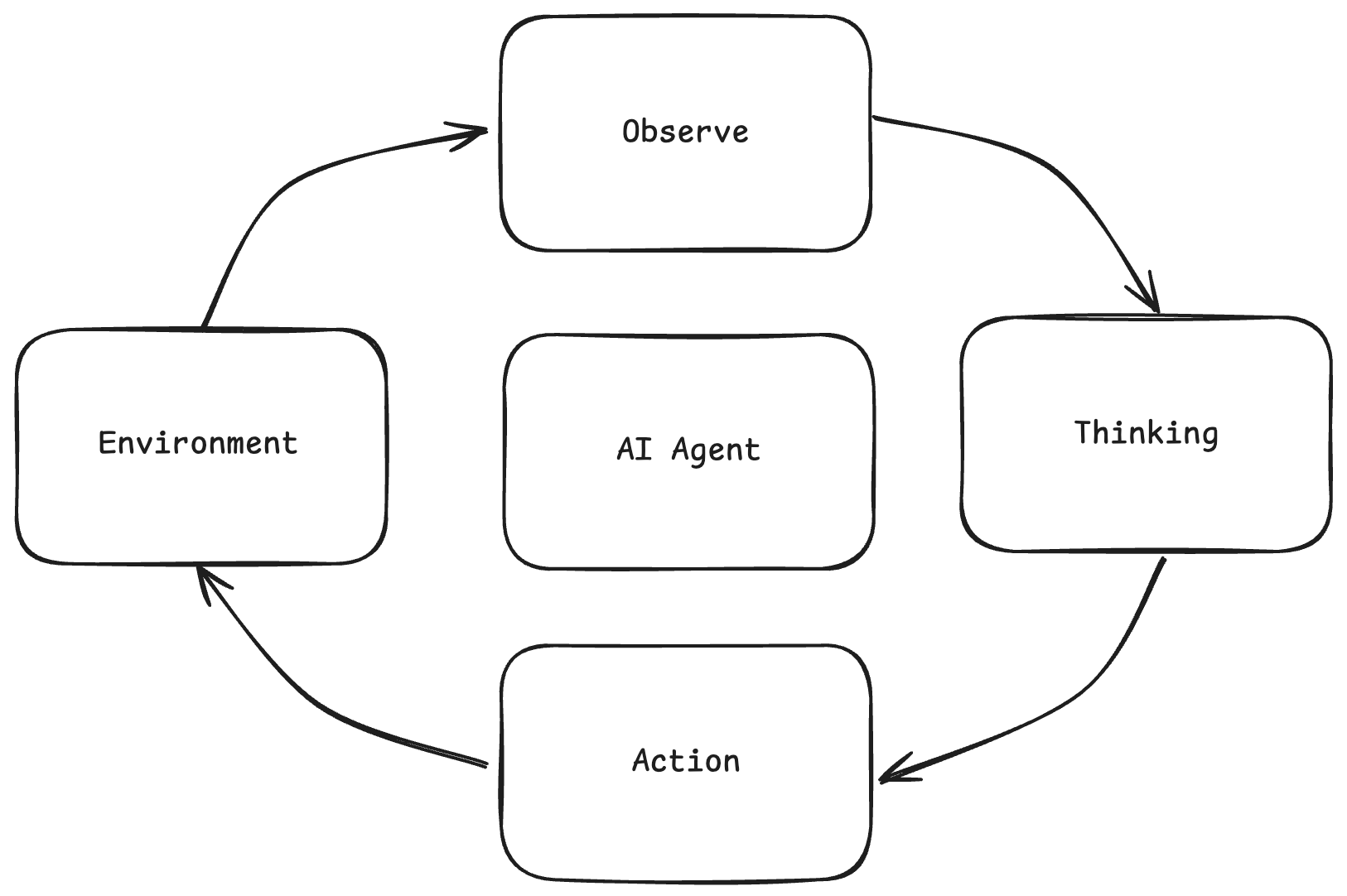

Before diving into the details of Chotot’s chatbot system, it helps to understand the key differences between traditional chatbots and the new generation of AI agents. These differences explain why today’s smart chatbots can transform customer experience and automate business operations.

Traditional chatbots rely on rigid scripts and fixed response templates, staying within the box of simple conversations. AI agents, on the other hand, break through those limitations: they are designed to reason independently, pursue multi-turn goals, and adapt to each user’s journey. That’s how an AI Agent can provide support beyond “just chatting”.

Below is a quick comparison that highlights these transformation points:

| Aspect | Traditional Chatbots | AI Agents |
|---|---|---|
| Core | Fixed decision trees and response templates | Autonomous reasoning and execution |
| Action Ability | Responds only to queries | Can perform multi-step actions and tasks independently |
| Approach to Goals | Reacts to single user input | Actively works to resolve complex, multi-step goals |
| Adaptability | Limited context awareness | Adaptive, uses memory and learns from interactions |
| Complexity Handling | Can’t process complex tasks | Handles complex tasks from user interaction |

Enhancing Our Users’ Experience with AI Agents

Now that we’ve explored what sets modern AI agents apart from traditional chatbots, let’s look at the real impact for Chotot’s users. In the next sections, you’ll discover how we design and operate our AI Agent Chatbot systems—and how these innovations are enhancing user satisfaction, boosting successful transactions, and creating meaningful value for each user on our platform.

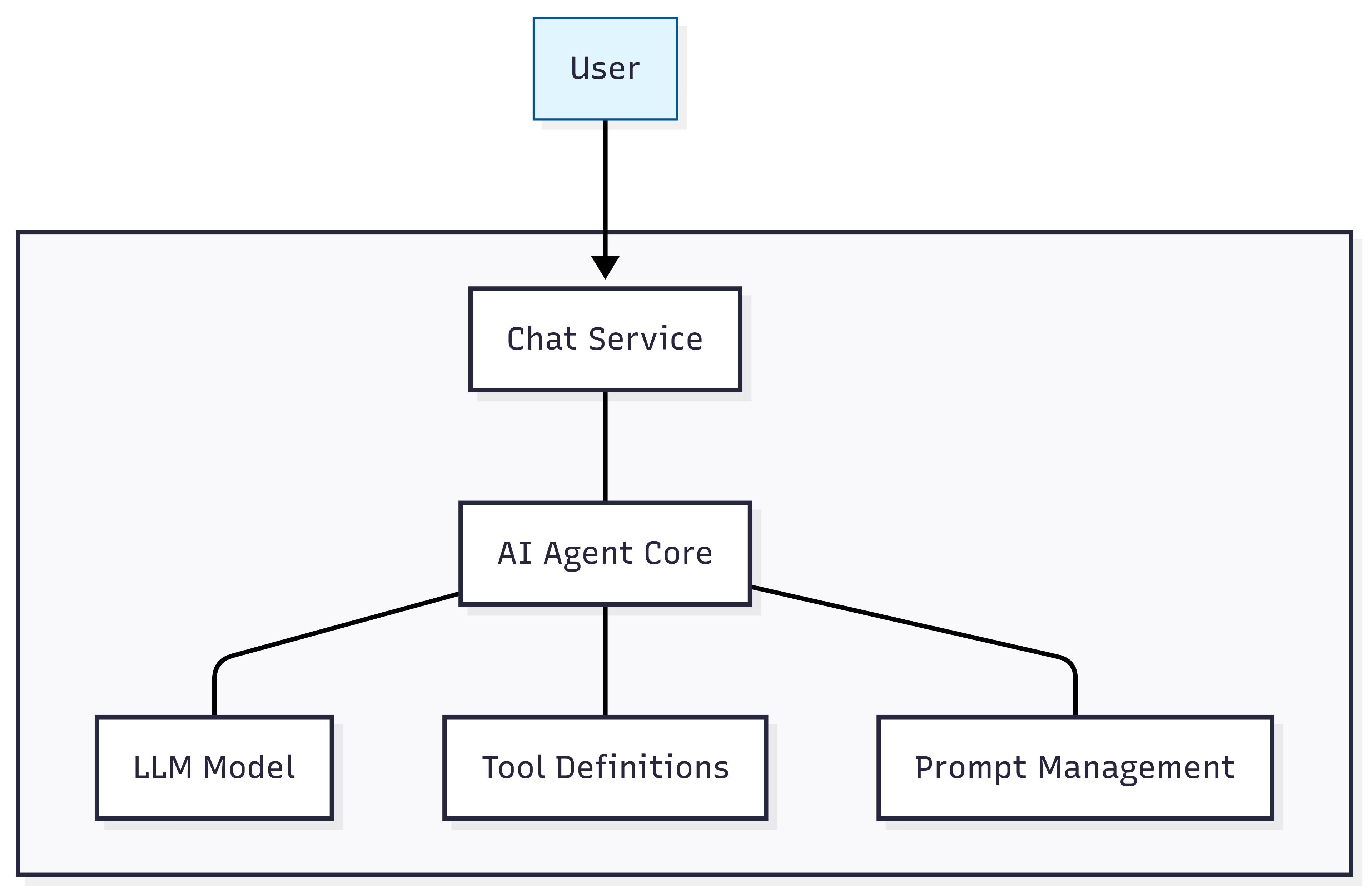

Starting from an All-in-One Approach

We kicked off our AI Agent journey with a monolithic design, packing everything into a single system. The chat service, LLM, tool definitions, and prompt management were all built together as one unified block. This all-in-one approach worked well for our MVP, quickly proving that AI Agents could genuinely help Chotot users with their questions and tasks.

But as Chotot’s business grew and our users’ expectations became more diverse, this “one-size-fits-all” solution couldn’t keep pace with the growing need for domain-specific intelligence, such as dedicated chatbots for the car, motorbike, and property domains. Each business vertical demanded its own specialized logic and features, making the monolithic system difficult to adapt and scale. That’s when we decided to change the chatbot’s architecture to solve these problems.

Transitioning to Orchestrator-Centered Design

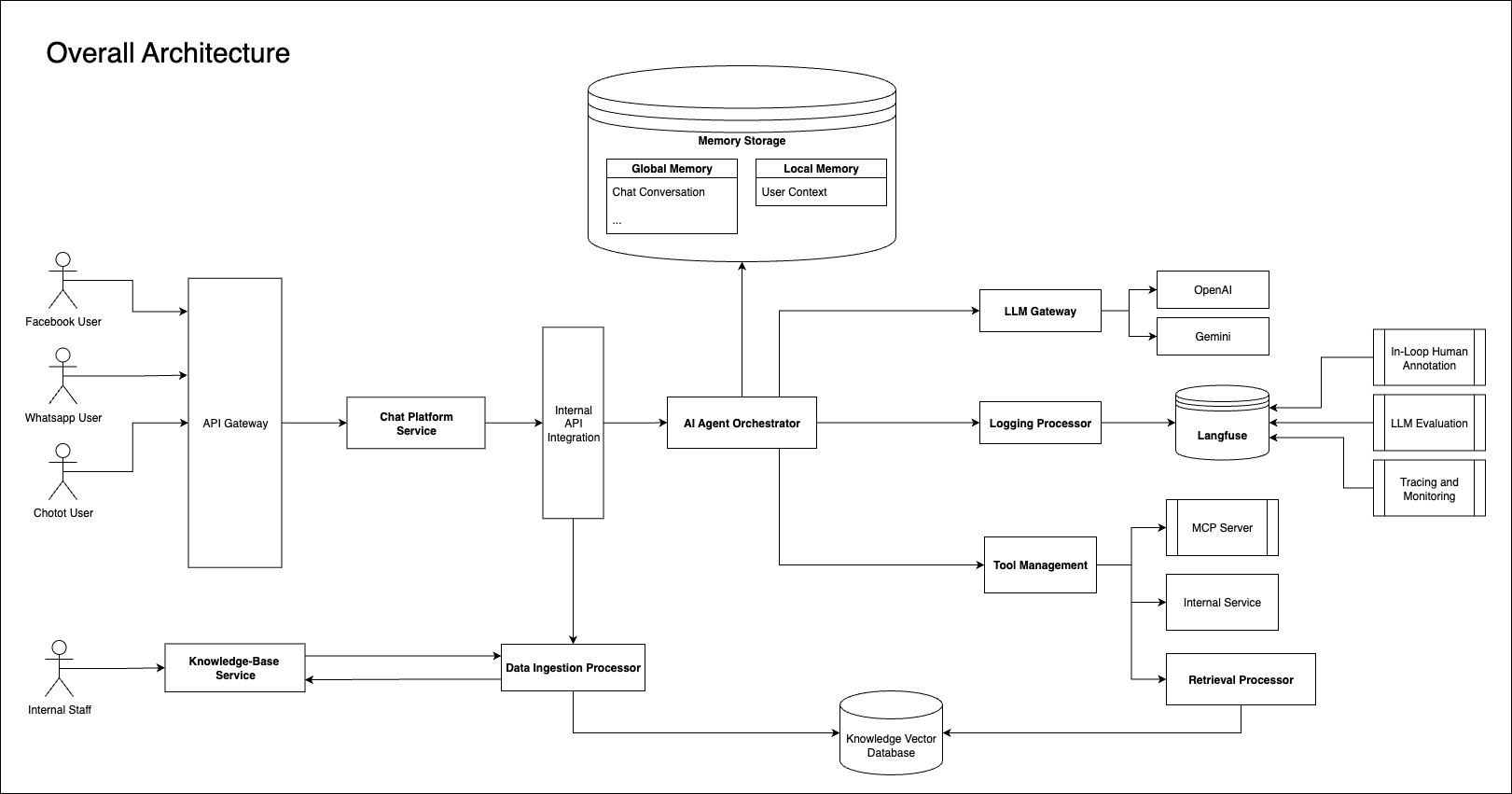

As Chotot’s vision for AI Agents evolved, we realized that true success would hinge on three key qualities: flexibility, seamless integration, and effortless extensibility. This inspired a major shift in our architecture—moving away from one big, bundled system to an orchestrator-centered design, with the AI Agent Orchestrator as the beating heart of our platform.

Instead of locking everything together, we broke our chatbot into separate, modular components, each handling its own job: chat interfaces, agent tool management, LLM routing, logging, prompt management, and more. Like building with Lego bricks, these modules can be assembled, swapped, and scaled to match ever-changing business needs.

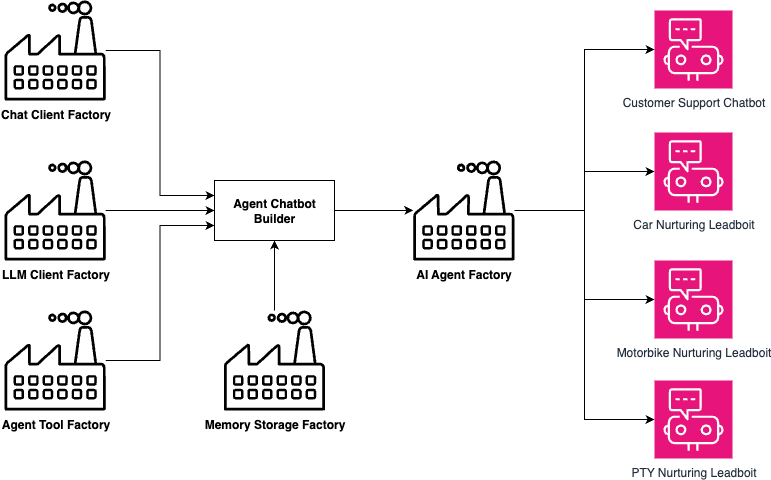

To bring true modularity to the system, we adopted the Factory design pattern for every module connected to the AI Agent Orchestrator (see Figure 4). This architecture lets us easily tweak, upgrade, or replace individual components, so when a new business domain or requirement emerges, we can quickly adapt any part of the system without disrupting anything else.
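To make the Factory idea concrete, here is a minimal sketch of how one module type could be registered and created by name. The class names (`ChatInterfaceFactory`, `WebChat`, `MobileChat`) and the `send` method are hypothetical illustrations, not Chotot’s actual code; the same shape would apply to other modules such as tools or prompt managers.

```python
from typing import Callable, Dict, Protocol


class ChatInterface(Protocol):
    """Hypothetical contract for any chat channel the orchestrator can use."""

    def send(self, user_id: str, text: str) -> str: ...


class WebChat:
    def send(self, user_id: str, text: str) -> str:
        return f"[web] to {user_id}: {text}"


class MobileChat:
    def send(self, user_id: str, text: str) -> str:
        return f"[mobile] to {user_id}: {text}"


class ChatInterfaceFactory:
    """Maps a configuration key to a builder for a concrete module."""

    def __init__(self) -> None:
        self._builders: Dict[str, Callable[[], ChatInterface]] = {}

    def register(self, name: str, builder: Callable[[], ChatInterface]) -> None:
        self._builders[name] = builder

    def create(self, name: str) -> ChatInterface:
        if name not in self._builders:
            raise KeyError(f"no chat interface registered under {name!r}")
        return self._builders[name]()


# The orchestrator only knows the key, never the concrete class.
factory = ChatInterfaceFactory()
factory.register("web", WebChat)
factory.register("mobile", MobileChat)

chat = factory.create("web")
reply = chat.send("u1", "hello")
```

Because callers depend only on the registered key and the shared interface, swapping or adding a channel is a one-line registration change rather than an edit to the orchestrator.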

Although the scaling problem was solved, a new challenge quickly appeared. We found that our chatbot still struggled with long-running conversations, often losing track of important details or missing information critical to a successful sale. Relying solely on chat history simply wasn’t enough. If a user changed their mind mid-conversation, asked a particularly complex question, or came back hours later, our bot might lose the thread and fail to provide meaningful support.

To overcome this, our AI agents had to be able to remember, learn, and personalize each interaction. That’s why we built multi-layered memory storage so that every agent can adapt to each user’s journey. This approach helps our agents deliver smarter, more relevant, and truly helpful experiences, no matter how complex or drawn-out the conversation becomes.

Giving Agents a Memory: Layered Context for Smarter Conversations

To address this problem, we introduced two kinds of memory:

- Long-term memory stores universal knowledge and high-level summaries, helping agents maintain context across sessions.

- Short-term memory keeps track of the ongoing thread, ensuring smooth continuity within a single interaction.

With this memory approach, the chatbot became far more consistent and accurate, delivering relevant responses. No matter how long the conversation lasts, our agent can reference important past details, recognize recurring needs, and draw on the history of each user’s conversations.
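The two layers can be sketched as follows. This is an illustrative, in-memory version under simple assumptions: the `LayeredMemory` class, its method names, and the turn limit are hypothetical, and a production system would back each layer with a real store and generate summaries with an LLM.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List


@dataclass
class LayeredMemory:
    max_turns: int = 4
    # Short-term layer: recent turns, keyed by session.
    short_term: Dict[str, Deque[str]] = field(default_factory=dict)
    # Long-term layer: durable summaries, keyed by user.
    long_term: Dict[str, List[str]] = field(default_factory=dict)

    def add_turn(self, session_id: str, text: str) -> None:
        turns = self.short_term.setdefault(session_id, deque(maxlen=self.max_turns))
        turns.append(text)  # the oldest turn is dropped once max_turns is reached

    def remember(self, user_id: str, summary: str) -> None:
        self.long_term.setdefault(user_id, []).append(summary)

    def build_context(self, user_id: str, session_id: str) -> str:
        """Combine both layers into the context passed to the LLM."""
        facts = self.long_term.get(user_id, [])
        turns = self.short_term.get(session_id, [])
        lines = [f"[known] {f}" for f in facts] + [f"[recent] {t}" for t in turns]
        return "\n".join(lines)


memory = LayeredMemory(max_turns=2)
memory.remember("u1", "Looking for an electric motorbike")
memory.add_turn("s1", "user: hi")
memory.add_turn("s1", "bot: hello")
memory.add_turn("s1", "user: any red ones?")
context = memory.build_context("u1", "s1")
```

In this sketch the long-term fact survives even though the first turn has already rolled out of the short-term window, which is the behavior that lets an agent pick up a conversation hours later.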

LLM Gateway Integration

With the memory challenge addressed, the next decision was choosing the right LLM for our AI Agents. To ensure maximum flexibility and resilience, we didn’t want to rely on a single LLM provider. Instead, we introduced an LLM gateway that integrates directly with the AI Agent Orchestrator.

The LLM Gateway acts as our smart router: it lets our AI agents switch between different LLM vendors (such as OpenAI or Gemini) or self-hosted models based on performance needs, cost, or availability. If one model is optimized for fast, everyday questions, we direct those queries there; if another offers deeper reasoning or domain-specific expertise, we switch to it as needed.

Moreover, this design doesn’t just optimize quality and cost; it also keeps our service resilient. If one provider experiences problems or downtime, requests can be rerouted to another, avoiding a single point of failure and keeping service smooth and uninterrupted for our users.
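The routing and failover behavior described above can be sketched in a few lines. Everything here is a simplified assumption for illustration: the `LLMGateway` class, the task names, and the stand-in provider functions are hypothetical, and real provider calls would go through each vendor’s SDK.

```python
from typing import Callable, Dict, List

LLMCall = Callable[[str], str]  # takes a prompt, returns a completion


class LLMGateway:
    def __init__(self, providers: Dict[str, LLMCall], routes: Dict[str, List[str]]):
        self.providers = providers
        # For each task type, an ordered list of provider names to try.
        self.routes = routes

    def complete(self, task: str, prompt: str) -> str:
        errors = []
        for name in self.routes[task]:
            try:
                return self.providers[name](prompt)
            except Exception as exc:  # fall through to the next provider
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def fast_model(prompt: str) -> str:
    # Stand-in for a provider that is currently down.
    raise TimeoutError("provider unavailable")


def reasoning_model(prompt: str) -> str:
    return f"reasoned answer to: {prompt}"


gateway = LLMGateway(
    providers={"fast": fast_model, "reasoning": reasoning_model},
    routes={"faq": ["fast", "reasoning"], "negotiation": ["reasoning", "fast"]},
)
answer = gateway.complete("faq", "Is this listing still available?")
```

Here the FAQ route prefers the fast model but falls back to the reasoning model when the first call fails, so the caller still receives an answer.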

Tool Integration and Management

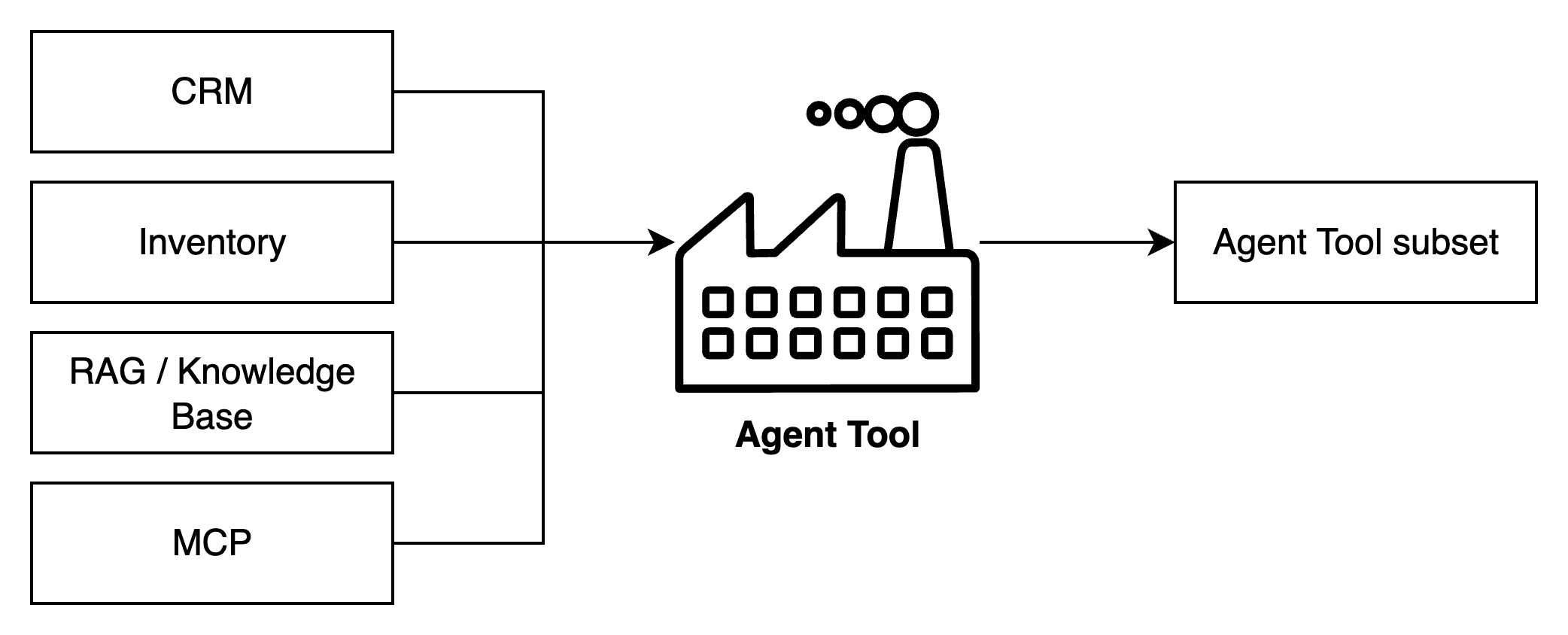

With flexible LLM routing now in place, our AI agents gained the ability to tailor their intelligence for every customer scenario. However, without real-world action, practical business value would be limited. To unlock it, our agents need more than brainpower: they need hands-on access to the tools that power daily operations. That’s where the Agent Tool Factory comes in (Figure 5).

The Agent Tool Factory lets us define and assemble a diverse toolbox, from classic integrations like CRM and inventory to newer protocols such as the Model Context Protocol (MCP) and retrieval approaches like retrieval-augmented generation (RAG). Whenever a new business need or external service arises, we simply generate the right tool subset and plug it in.
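One way to picture “generating the right tool subset” is a registry that tags each tool with the domains it serves. The sketch below is a hypothetical illustration: the `Tool` and `AgentToolFactory` classes, the tool names, and the domain labels are assumptions, not the actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Tool:
    name: str
    description: str  # shown to the LLM so it can decide when to call the tool
    run: Callable[[str], str]


class AgentToolFactory:
    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}
        self._domains: Dict[str, List[str]] = {}

    def register(self, tool: Tool, domains: List[str]) -> None:
        self._tools[tool.name] = tool
        for domain in domains:
            self._domains.setdefault(domain, []).append(tool.name)

    def tools_for(self, domain: str) -> List[Tool]:
        """Return only the tools an agent for this domain should see."""
        return [self._tools[name] for name in self._domains.get(domain, [])]


tool_factory = AgentToolFactory()
tool_factory.register(
    Tool("search_listings", "Search active listings", lambda q: f"results for {q}"),
    domains=["cars", "motorbikes", "property"],
)
tool_factory.register(
    Tool("loan_calculator", "Estimate monthly payments", lambda q: f"estimate for {q}"),
    domains=["cars", "property"],
)

motorbike_tools = [t.name for t in tool_factory.tools_for("motorbikes")]
car_tools = [t.name for t in tool_factory.tools_for("cars")]
```

Keeping the subset small per domain also keeps the tool descriptions sent to the LLM short, which tends to make tool selection easier for the model.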

Evaluation and Monitoring

Of course, giving our agents advanced capabilities is only half the story—ensuring they perform reliably and transparently is just as crucial. That’s why we integrated Langfuse as our LLM application monitoring framework.

Langfuse tracks every interaction in granular detail, logging inputs and outputs, monitoring latency and model costs, and managing prompt versions. With its built-in LLM-as-a-Judge evaluation, every agent response is scored for quality, factual accuracy, and contextual relevance.

This monitoring approach allows us to precisely trace which tools the agent used, how well it maintained conversation context, and whether knowledge retrieval was truly effective, giving us the insight needed for continual optimization and proactive troubleshooting.
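To show the kind of record such monitoring produces, here is a small, library-free sketch. It deliberately does not use the Langfuse SDK; the `Trace` dataclass, `traced_call` helper, and the demo agent and judge functions are hypothetical stand-ins for what an observability platform captures per interaction.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Trace:
    user_input: str
    output: str = ""
    latency_s: float = 0.0
    tools_used: List[str] = field(default_factory=list)
    score: Optional[float] = None  # filled in by the judge


def traced_call(
    agent: Callable[[str, List[str]], str],
    user_input: str,
    judge: Callable[[str, str], float],
) -> Trace:
    """Run the agent once and record input, output, latency, tools, and score."""
    trace = Trace(user_input=user_input)
    start = time.perf_counter()
    trace.output = agent(user_input, trace.tools_used)
    trace.latency_s = time.perf_counter() - start
    trace.score = judge(user_input, trace.output)
    return trace


def demo_agent(text: str, tools_used: List[str]) -> str:
    tools_used.append("search_listings")  # the agent reports each tool it calls
    return "Found 3 matching listings."


def demo_judge(question: str, answer: str) -> float:
    # Stand-in for an LLM judge: a real one would prompt a model to grade the answer.
    return 1.0 if answer else 0.0


trace = traced_call(demo_agent, "Any electric motorbikes nearby?", demo_judge)
```

A record like this is enough to answer the questions raised above: which tools were called, how long the response took, and how the answer was graded.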

Finally, these practices help to ensure that Chotot’s platform delivers not just information, but real action and trustworthy support for all users.

From Practice to Use Case

Our strategy goes far beyond technical refinement—it delivers tangible, business-changing results for Chotot. After shifting to the new AI agent architecture, the improvements in our core metrics were unmistakable. Our Customer Support Chatbot now boasts a remarkable 91.2% accuracy, with coverage climbing to 50.7%. User satisfaction hit an impressive 84.2%, and the average response time dropped to just 6.6 seconds, creating effortless and timely support.

But the transformation didn’t stop there. When we applied AI agent technology to nurturing leads for electric motorbikes, the placed-order rate rose from 7% to 10%, a relative increase of about 43%, driven by smarter engagement and tailored interactions. Perhaps the most striking achievement: the proportion of instant replies (sent within five minutes) jumped from 17.8% to 60.3%, a relative improvement of about 238% that delivers real-time value to our users.
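The percentage gains quoted above are relative changes, which can be checked with a short calculation. The helper name `relative_change` is ours; the inputs are the rounded figures from the text, so the last digit may differ slightly from numbers computed on unrounded data.

```python
def relative_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Placed-order rate: 7% -> 10% (a 3-point absolute gain).
order_rate_gain = relative_change(7.0, 10.0)

# Replies within five minutes: 17.8% -> 60.3% (a 42.5-point absolute gain).
fast_reply_gain = relative_change(17.8, 60.3)

print(f"order rate: +{order_rate_gain:.1f}%")
print(f"fast replies: +{fast_reply_gain:.1f}%")
```

With the rounded inputs this gives roughly 42.9% and 238.8%, in line with the reported figures of about 43% and 238%.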

In the end, our journey of building AI Agent chatbots for Chotot has answered the central question posed at the start of this blog: with thoughtful architecture and robust monitoring, AI agents can reshape business outcomes and elevate user experiences throughout the platform.

Conclusion

To wrap up our journey with AI Agents at Chotot, here are the key takeaways that have guided our progress:

- Define carefully what each AI Agent should do:

  Set clear goals, boundaries, and responsibilities for each agent from the start. This focus keeps development on track and ensures each agent delivers real value.

- Start simple and add complexity later:

  Launch with straightforward use cases, then layer on complexity as you learn from users and real-world needs. This stepwise approach reduces risk and encourages steady growth.

- Choose a suitable LLM for each business domain:

  Always match the language model to the unique requirements of every domain for the best balance of accuracy, speed, and efficiency.

- Always monitor, and keep adding evaluation processes to catch unexpected situations:

  Make ongoing monitoring and evaluation a habit. By tracking performance and quickly spotting issues, you can optimize agents continuously and provide consistent, reliable support.